Overview

Manage your campaigns

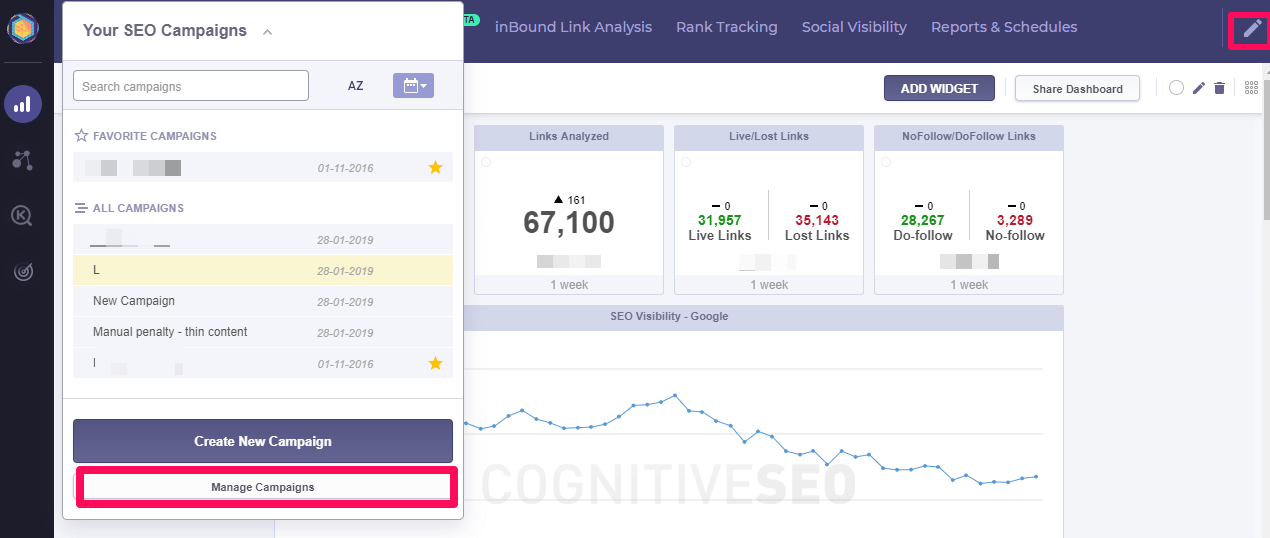

When you log in to your account, you will find several features at the top of the dashboard. To manage your campaigns, click the Manage Campaigns button at the top of the page.

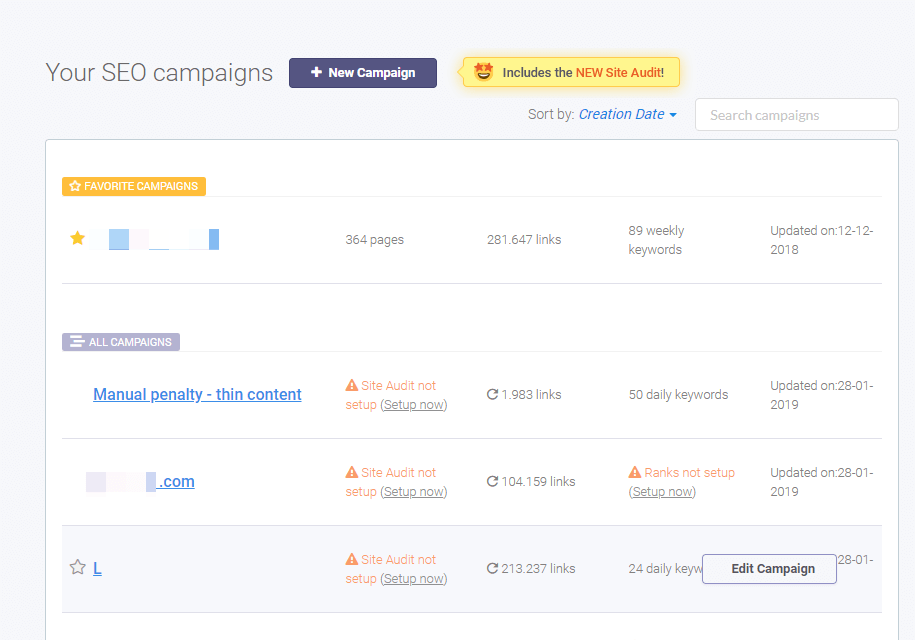

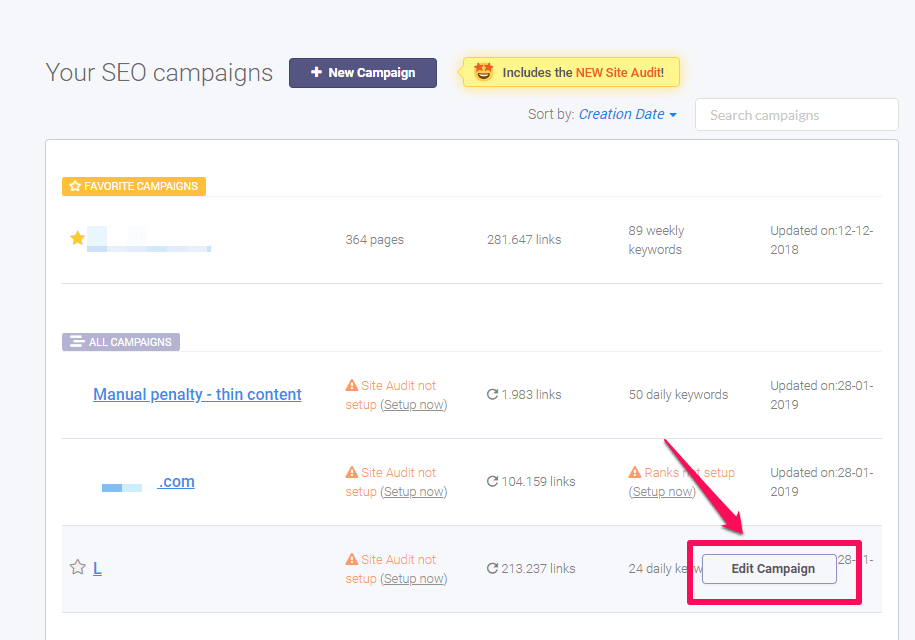

Selecting a campaign

There are several ways to select a campaign. The easiest is directly from the SEO Dashboard, where all your campaigns are listed. Hover over the campaign you want to change.

Editing a campaign

To edit a campaign, press the Edit button. This opens several options for editing your campaign. Your changes are applied only after you press the Apply changes button.

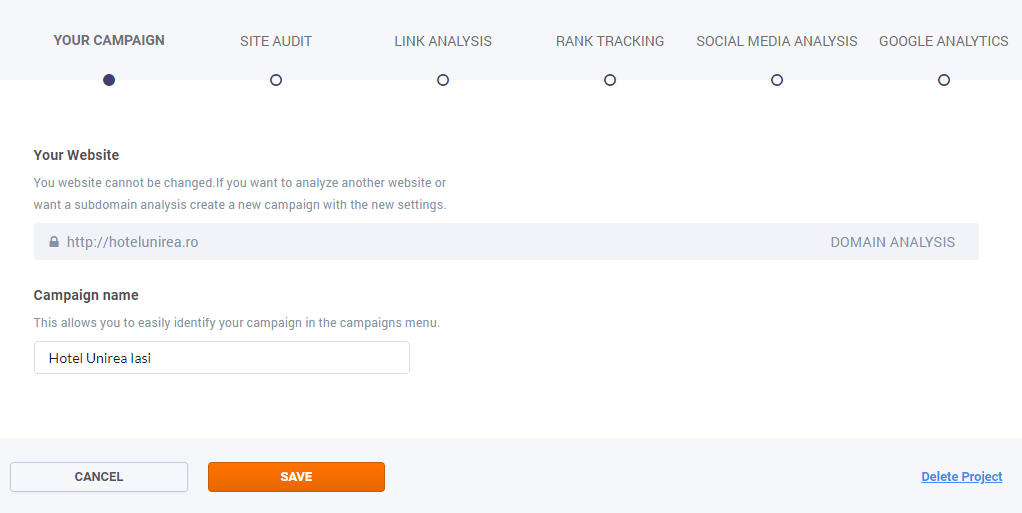

1. Your website

The Your website category in the left sidebar menu shows information about the site you chose to analyze. Once a website is registered in the campaign, it cannot be changed or deleted.

2. Site Audit

The Campaign editor allows you to change the Site Audit settings for your website. The following options are available:

- the number of crawled pages: 100 – unlimited;

- the crawl speed: 10 URLs/min – 30 URLs/min;

- the desired elements for crawling;

- the recrawl period.
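As a rough check on the crawl speed setting, the time a crawl takes is approximately the number of pages divided by the speed. The sketch below assumes a constant rate and ignores server response times, retries and recrawls; `estimate_crawl_minutes` is a hypothetical helper, not part of cognitiveSEO.

```python
import math


def estimate_crawl_minutes(pages: int, urls_per_minute: int) -> float:
    """Rough crawl duration in minutes, assuming a constant crawl speed.

    Hypothetical helper for illustration only: real crawls also depend on
    server response times and on the elements selected for crawling.
    """
    if pages < 0:
        raise ValueError("pages must be non-negative")
    if urls_per_minute <= 0:
        raise ValueError("urls_per_minute must be positive")
    return pages / urls_per_minute


# Compare the slowest and fastest speeds from the range above for a 1,000-page site.
for speed in (10, 30):
    minutes = estimate_crawl_minutes(1_000, speed)
    print(f"{speed} URLs/min -> about {math.ceil(minutes)} minutes")
```

Under these assumptions, a 1,000-page site takes about 100 minutes at 10 URLs/min and about 34 minutes at 30 URLs/min.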

Deciding what to crawl can be a little tricky. There are many elements you can choose from:

- Internal sub-domains have the format subdomain.example.com; a common example is blog.example.com. If you want to crawl and analyze your subdomains (if you have any), select this box.

- Non-HTML files are image files, PDFs, Microsoft Office or OpenDocument files, and audio or video files stored on the web server.

- CSS files define the styling of HTML pages. CSS stands for Cascading Style Sheets and is used by web pages to control how their content is displayed.

- External links are links that point to another website. For example, a link on your site to a URL on another domain is an external link. External links send the visitor from your site to another site.

- Images include all the images, in any format, added to your website's media library.

- Nofollow links carry the rel="nofollow" attribute, which tells search engines that they shouldn't follow those links.

- Noindex pages are pages the webmaster has blocked from appearing in search engines, either with a noindex meta tag in the page's HTML code or by returning a 'noindex' header in the HTTP response. Select this box if you want the Site Audit to crawl them.

- JavaScript files contain the scripts that an HTML page loads (typically through script tags in its HEAD or BODY) to add interactive behavior.
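To make some of these distinctions concrete, here is a minimal standard-library sketch of how a crawler might label links as internal, sub-domain or external, spot nofollow links, and detect noindex pages through the robots meta tag or an X-Robots-Tag response header. The helper names are hypothetical and example.com is a placeholder; this illustrates the concepts only and is not how cognitiveSEO's Site Audit is implemented.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


def classify_link(href: str, page_url: str, site_domain: str) -> str:
    """Label a link as 'internal', 'internal-subdomain' or 'external' (hypothetical helper)."""
    host = (urlparse(urljoin(page_url, href)).hostname or "").lower()
    site_domain = site_domain.lower()
    if host == site_domain:
        return "internal"
    # Note: www.example.com is treated as a sub-domain of example.com here.
    if host.endswith("." + site_domain):
        return "internal-subdomain"
    return "external"


def header_says_noindex(headers: dict[str, str]) -> bool:
    """True if an X-Robots-Tag response header contains 'noindex'."""
    value = next((v for k, v in headers.items() if k.lower() == "x-robots-tag"), "")
    return "noindex" in value.lower()


class PageScanner(HTMLParser):
    """Collect links with their nofollow status and detect a robots noindex meta tag."""

    def __init__(self) -> None:
        super().__init__()
        self.links: list[tuple[str, bool]] = []  # (href, is_nofollow)
        self.noindex = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "a" and attrs.get("href"):
            rel = (attrs.get("rel") or "").lower().split()
            self.links.append((attrs["href"], "nofollow" in rel))
        elif tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            content = (attrs.get("content") or "").lower()
            if "noindex" in [part.strip() for part in content.split(",")]:
                self.noindex = True


html = """<html><head><meta name="robots" content="noindex, follow"></head>
<body><a href="/about">About</a>
<a href="https://blog.example.com/post" rel="nofollow">Blog</a>
<a href="https://other.org/">Partner</a></body></html>"""

scanner = PageScanner()
scanner.feed(html)
print("noindex:", scanner.noindex)
for href, nofollow in scanner.links:
    label = classify_link(href, "https://example.com/", "example.com")
    print(href, label, "nofollow" if nofollow else "")
```

For this sample page the scanner reports noindex, labels `/about` as internal, the blog link as a nofollow sub-domain link, and `other.org` as external.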

Advanced Options

The Advanced Options let you configure a more accurate analysis of a website.

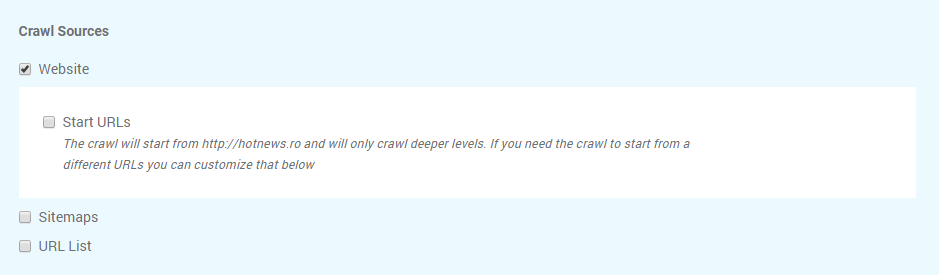

- Crawl Sources

By default, cognitiveSEO crawls your website. Two other options are available: it can also be configured to crawl XML Sitemap URLs and/or a provided URL List.

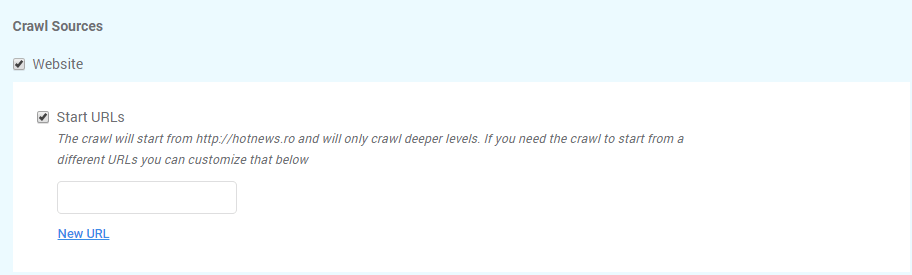

When you select the Crawl website option, the tool crawls your web pages and follows their links to discover new URLs until every page on the website has been crawled. If you want the crawl to start from different URLs, you can specify them under Start URLs.

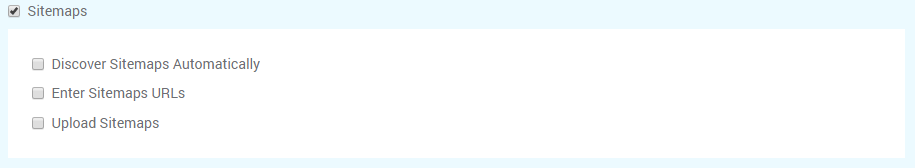
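The discovery process described above is essentially a breadth-first traversal of the site's link graph. The sketch below assumes a hypothetical `get_links(url)` function standing in for fetching and parsing a page, and uses a small made-up site; the optional `max_pages` cap mirrors the crawled-pages limit. It illustrates the idea and is not cognitiveSEO's crawler.

```python
from collections import deque


def crawl(start_urls, get_links, max_pages=None):
    """Breadth-first URL discovery: visit pages, queue newly found URLs, stop when none are left.

    get_links(url) returns the URLs linked from a page (hypothetical stand-in
    for fetching and parsing it). max_pages optionally caps the crawl size.
    """
    seen = set(start_urls)
    queue = deque(start_urls)
    order = []
    while queue and (max_pages is None or len(order) < max_pages):
        url = queue.popleft()
        order.append(url)
        for link in get_links(url):
            if link not in seen:  # each URL is queued only once
                seen.add(link)
                queue.append(link)
    return order


# A tiny, made-up site: each page maps to the pages it links to.
site = {
    "/": ["/products", "/blog"],
    "/products": ["/products/a", "/"],
    "/blog": ["/blog/post-1"],
    "/products/a": [],
    "/blog/post-1": ["/products/a"],
}

print(crawl(["/"], lambda url: site.get(url, [])))
print(crawl(["/blog"], lambda url: site.get(url, [])))
```

Starting from `/` reaches all five pages, while starting from `/blog` only finds `/blog`, `/blog/post-1` and `/products/a`, because no link leads back to the home page. This is why the choice of Start URLs matters.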

cognitiveSEO also offers a Crawl Sitemaps option, which allows a more in-depth audit. After you select the Sitemaps box, make sure you choose how the Sitemap should be included in the Site Audit: you can let the tool discover it automatically, enter its URL, or upload it from your computer.

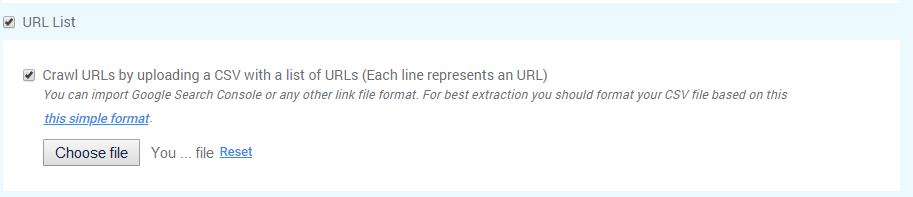
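An XML sitemap following the sitemaps.org protocol lists each page URL in a `<loc>` element. The sketch below shows how those URLs could be read with Python's standard library; `sitemap_urls` is a hypothetical helper and the sitemap content is made up. It is not how cognitiveSEO processes sitemaps.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(xml_text: str) -> list[str]:
    """Return the <loc> URLs listed in a sitemap (or, for a sitemap index, the child sitemap URLs)."""
    root = ET.fromstring(xml_text)
    return [loc.text.strip() for loc in root.iterfind(".//sm:loc", SITEMAP_NS) if loc.text]


example = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/pricing</loc><lastmod>2024-01-15</lastmod></url>
</urlset>"""

print(sitemap_urls(example))
```

For a sitemap index file, the same function returns the URLs of the child sitemaps, which a crawler would then fetch and read in turn.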

The third option, Crawl URL list, works by uploading a saved file from your computer. Make sure you select both boxes to save your options.

URL lists are typically used when you DON'T also crawl the website, to crawl only a specific area or section of the site.

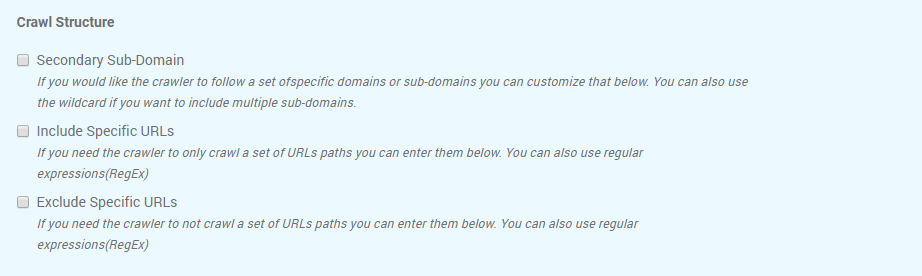

- Crawl Structure

The Crawl Structure options let you crawl the website's data from specific sources, such as a secondary sub-domain or a specific URL path. If there are URLs you don't want crawled, select the last box to tell the tool to crawl all links except those under the selected path.

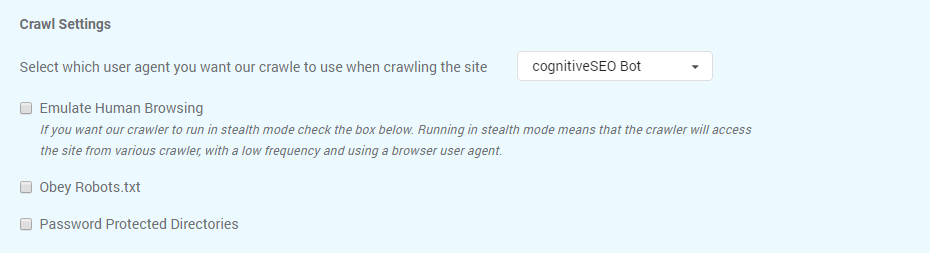
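The include and exclude path options can be thought of as a simple prefix rule applied to each discovered URL. The sketch below assumes plain path-prefix matching; `in_crawl_scope` and its parameters are hypothetical, and cognitiveSEO's actual matching rules may differ.

```python
from urllib.parse import urlparse


def in_crawl_scope(url: str, include_path: str = "/", exclude_paths: tuple[str, ...] = ()) -> bool:
    """Hypothetical scope rule: keep URLs under include_path, drop those under any excluded path.

    Matching is by path prefix, so a trailing slash matters: "/blog/" does not
    exclude "/blogroll", while "/blog" would.
    """
    path = urlparse(url).path or "/"
    if not path.startswith(include_path):
        return False
    return not any(path.startswith(prefix) for prefix in exclude_paths)


urls = [
    "https://example.com/shop/shoes",
    "https://example.com/shop/cart/checkout",
    "https://example.com/blog/news",
]

# Crawl only the /shop/ section, but skip the cart pages.
print([u for u in urls if in_crawl_scope(u, include_path="/shop/", exclude_paths=("/shop/cart/",))])
# Crawl everything except the selected path.
print([u for u in urls if in_crawl_scope(u, exclude_paths=("/blog/",))])
```

The first filter keeps only the shoes page; the second keeps both shop URLs and drops the blog URL.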

- Crawl Settings

In Crawl Settings, select the settings that fit the website you want to audit and choose the crawling method.

You can change the user agent used for crawling by choosing one from the list available in cognitiveSEO:

- Applebot

- Bingbot

- Bingbot Mobile

- Chrome

- cognitiveSEO Bot

- Firefox

- Generic

- Google Web Preview

- Googlebot

- Googlebot – Image

- Googlebot – Mobile Feature Phone

- Googlebot – News

- Googlebot – Smartphone

- Googlebot – Video

- Internet Explorer 8

- Internet Explorer 6

- iPhone

The Emulate Human Browsing option makes the crawler access the site from various crawlers, at a low frequency and with a browser user agent, following natural user behavior.

A robots.txt file tells robots which URLs on a website they may crawl. If you select Obey Robots.txt, URLs will be crawled according to the rules in the robots.txt file.

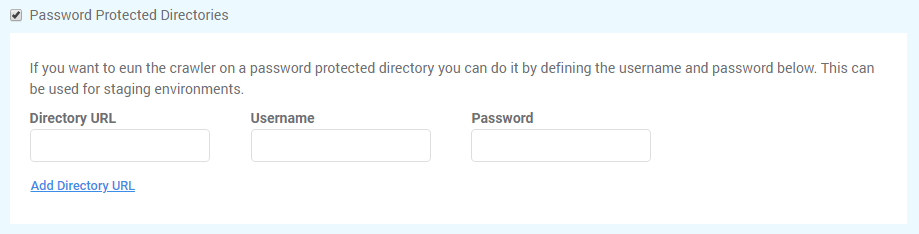
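Obeying robots.txt interacts with the user agent chosen above, because robots.txt rules are grouped by User-agent: the same URL can be allowed for one bot and blocked for another. The sketch below uses Python's standard `urllib.robotparser` with a made-up robots.txt; cognitiveSEO's own handling of edge cases may differ.

```python
from urllib.robotparser import RobotFileParser

# A made-up robots.txt for illustration.
robots_txt = """
User-agent: Googlebot
Disallow: /private/

User-agent: *
Disallow: /admin/
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

for agent, url in [
    ("Googlebot", "https://example.com/private/report"),
    ("Googlebot", "https://example.com/admin/"),
    ("Bingbot", "https://example.com/admin/"),
    ("Bingbot", "https://example.com/private/report"),
]:
    print(agent, url, "allowed" if parser.can_fetch(agent, url) else "blocked")
```

Here Googlebot is blocked from `/private/` but may fetch `/admin/`, since it follows only its own group; Bingbot falls back to the `*` group, so it is blocked from `/admin/` and allowed on `/private/`.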

If you want to run the crawler on a password-protected directory, use the Password Protected Directories option and enter the username and password below. This is useful for staging environments.

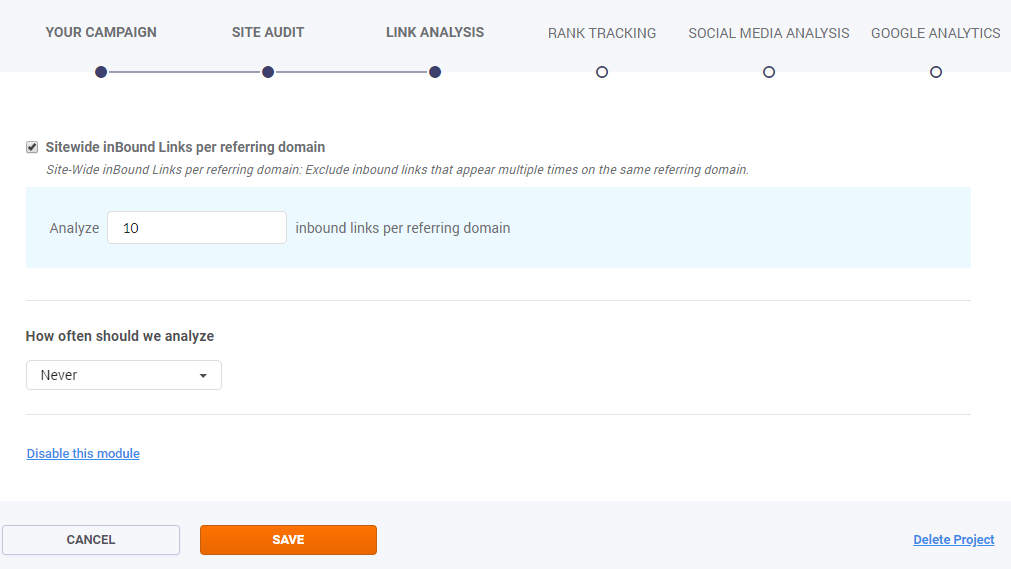
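Assuming the protected directory uses HTTP Basic authentication (a common scheme for staging sites, though the source does not say which scheme cognitiveSEO supports), the credentials travel in an Authorization header. The sketch below builds such a header and prepares, without sending, a request that also carries a user agent; the helper names, URL and user agent string are hypothetical placeholders.

```python
import base64
import urllib.request


def basic_auth_header(username: str, password: str) -> str:
    """Build an HTTP Basic Authorization header value (RFC 7617)."""
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return f"Basic {token}"


def build_request(url: str, username: str, password: str, user_agent: str) -> urllib.request.Request:
    """Prepare (but do not send) a request carrying credentials and a chosen user agent."""
    return urllib.request.Request(
        url,
        headers={
            "Authorization": basic_auth_header(username, password),
            "User-Agent": user_agent,
        },
    )


req = build_request(
    "https://staging.example.com/private/",  # placeholder staging URL
    "user",
    "pass",
    "ExampleAuditBot/1.0",  # hypothetical user agent string
)
print(req.get_header("Authorization"))
```

Basic credentials are only base64-encoded, not encrypted, so a staging site protected this way should be served over HTTPS.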

3. Link Analysis

In the Link Analysis category, you can choose to analyze between 1 and 20 Site-Wide inBound Links per referring domain. Inbound links that appear multiple times on the same referring domain beyond this number are excluded from the analysis.

You can also choose to exclude all internal links coming from the sub-domains of the main analyzed domain. For instance, if you entered domain.com, all inBound links coming from www.domain.com or sub.domain.com will be excluded from the analysis.
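The two Link Analysis settings described above can be sketched as a filter over a list of linking pages. This is a simplified illustration under stated assumptions: `filter_inbound_links` is hypothetical, each hostname is treated as a separate referring domain, and cognitiveSEO's own definitions of referring domains and site-wide links may differ.

```python
from collections import defaultdict
from urllib.parse import urlparse


def filter_inbound_links(links, analyzed_domain: str, max_per_domain: int = 1, exclude_subdomains: bool = True):
    """Hypothetical sketch of the two Link Analysis settings.

    links: source URLs that link to the analyzed site.
    Keeps at most max_per_domain links per referring hostname (1 to 20) and,
    if exclude_subdomains is set, drops links coming from the analyzed domain
    itself or its sub-domains (e.g. www.domain.com, sub.domain.com).
    """
    if not 1 <= max_per_domain <= 20:
        raise ValueError("max_per_domain must be between 1 and 20")
    analyzed_domain = analyzed_domain.lower()
    kept = []
    counts = defaultdict(int)
    for source in links:
        host = (urlparse(source).hostname or "").lower()
        if exclude_subdomains and (host == analyzed_domain or host.endswith("." + analyzed_domain)):
            continue  # internal link from the analyzed domain or one of its sub-domains
        if counts[host] < max_per_domain:
            counts[host] += 1
            kept.append(source)
    return kept


links = [
    "https://partner.org/",
    "https://partner.org/about",
    "https://partner.org/footer-page",
    "https://news.site/article",
    "https://www.domain.com/",
    "https://sub.domain.com/page",
]

print(filter_inbound_links(links, "domain.com", max_per_domain=2))
```

With a limit of 2, the third partner.org link is dropped as an extra site-wide link, and the www and sub links are dropped as internal sub-domain links.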

You also have the option to disable this module if you don't want to track the website's link data.

4. Rank Tracking

You can choose to track keywords for the subdomain of the entered website, set tracking to daily or weekly, or disable the module.

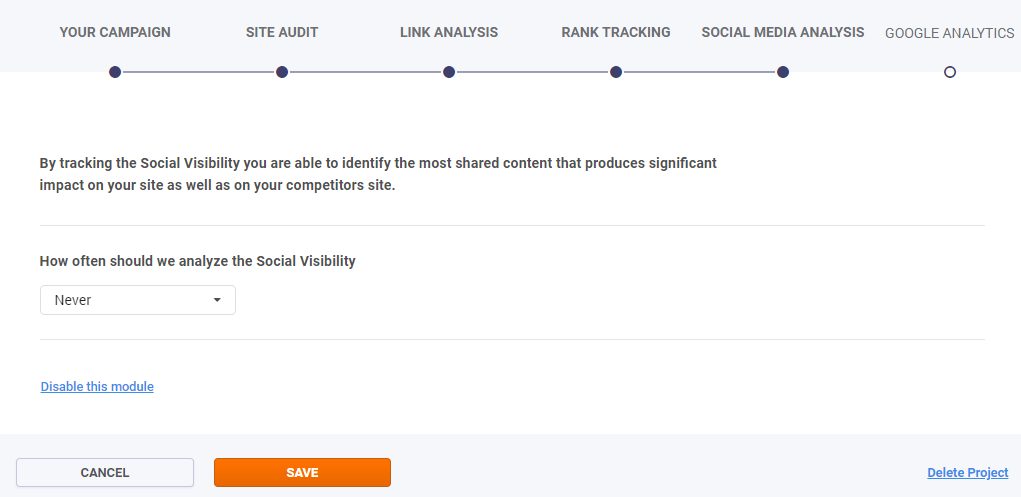

5. Social Signals

You can edit which social signal information is tracked for the analyzed website. You can track social activity weekly, biweekly, monthly, or never. If you want to stop receiving data from this module, click Disable this module.

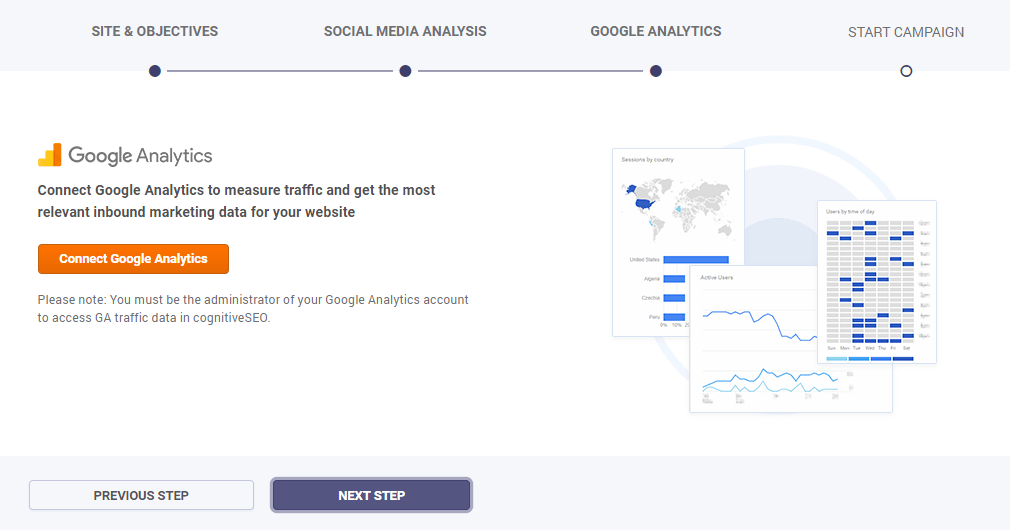

6. Google Analytics

You can authorize your Google Analytics account to measure traffic and get the most relevant inbound marketing data for your website. Doing so gives you extra information and can help you create better reports with the SEO data you get from the cognitiveSEO toolset. If you have already authorized an account, you can delete or change it.

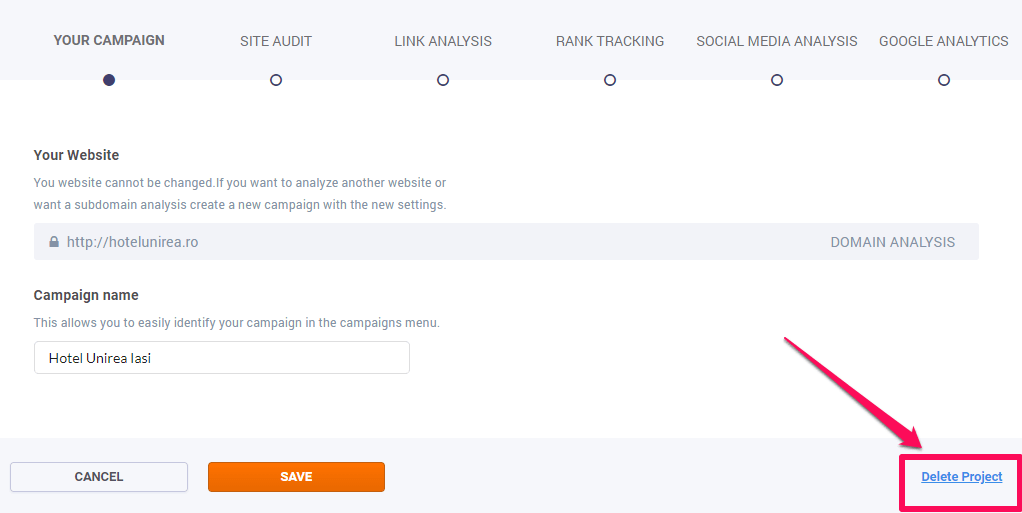

Deleting a campaign

You can delete your campaign by clicking the red Delete Campaign button. A dialog box will then ask you to confirm the operation by typing “confirm”. By confirming, you accept that all data will be lost. Note that deleting a campaign does not reset any used sites; the sites are reset on your next billing day.

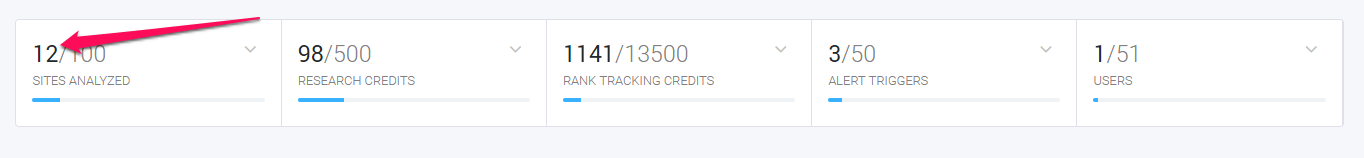

Number of locked sites

The dashboard shows the number of sites you can still analyze in the current month. Depending on your pricing plan, you are assigned a certain number of sites each month. The number below shows how many sites you can still analyze, while the rest of the sites are locked for your current campaigns.