Creating a campaign

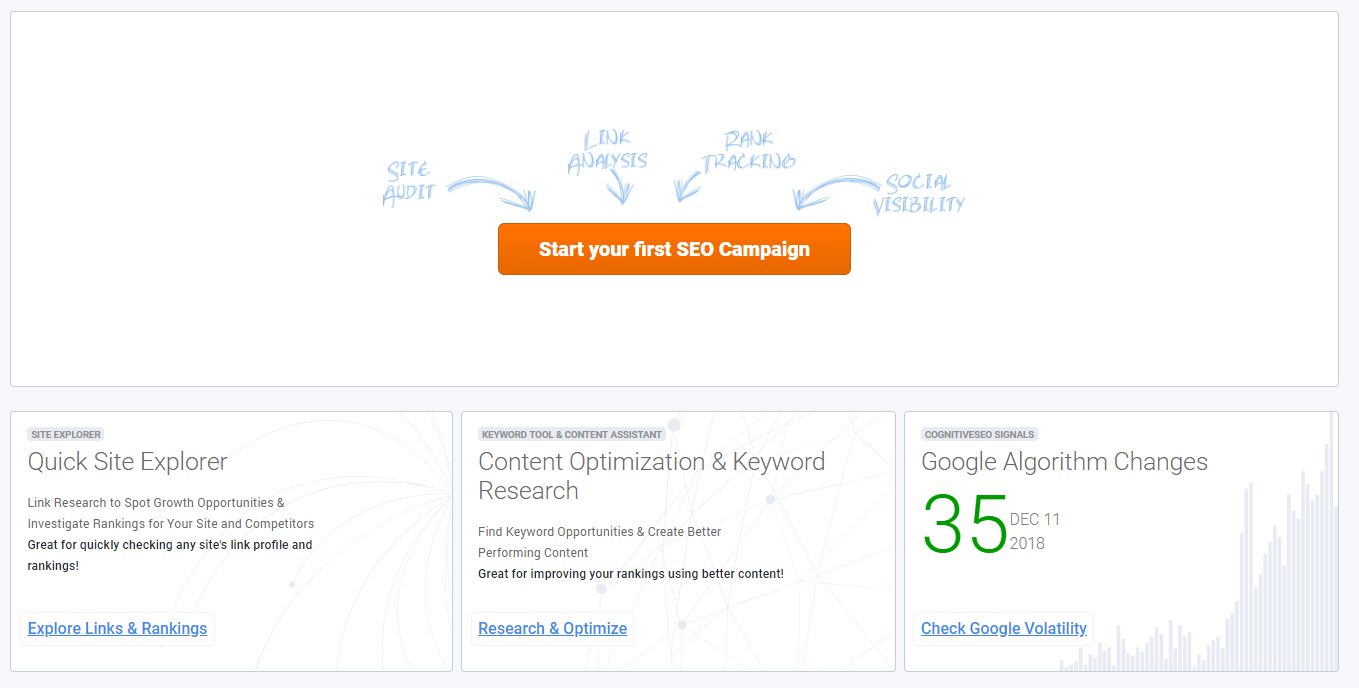

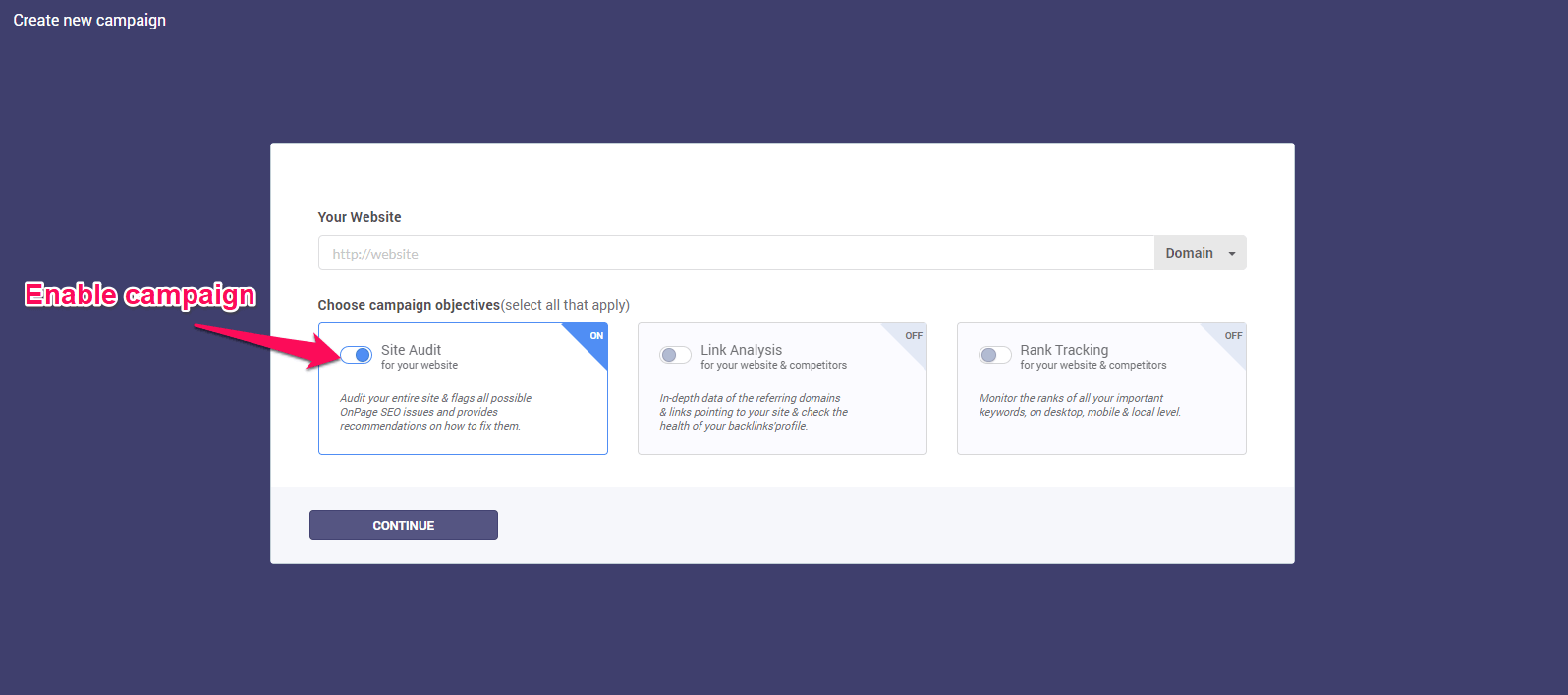

To create a campaign, click Start your first SEO campaign on the main Dashboard. The wizard opens and lets you select the module you need.

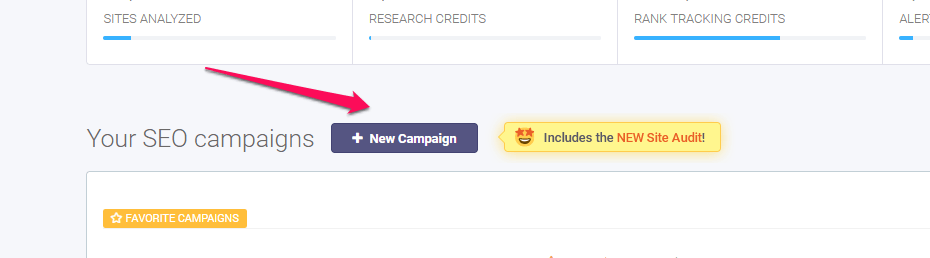

If you have already created a campaign and want to create another one, click the +New Campaign button above your list of campaigns:

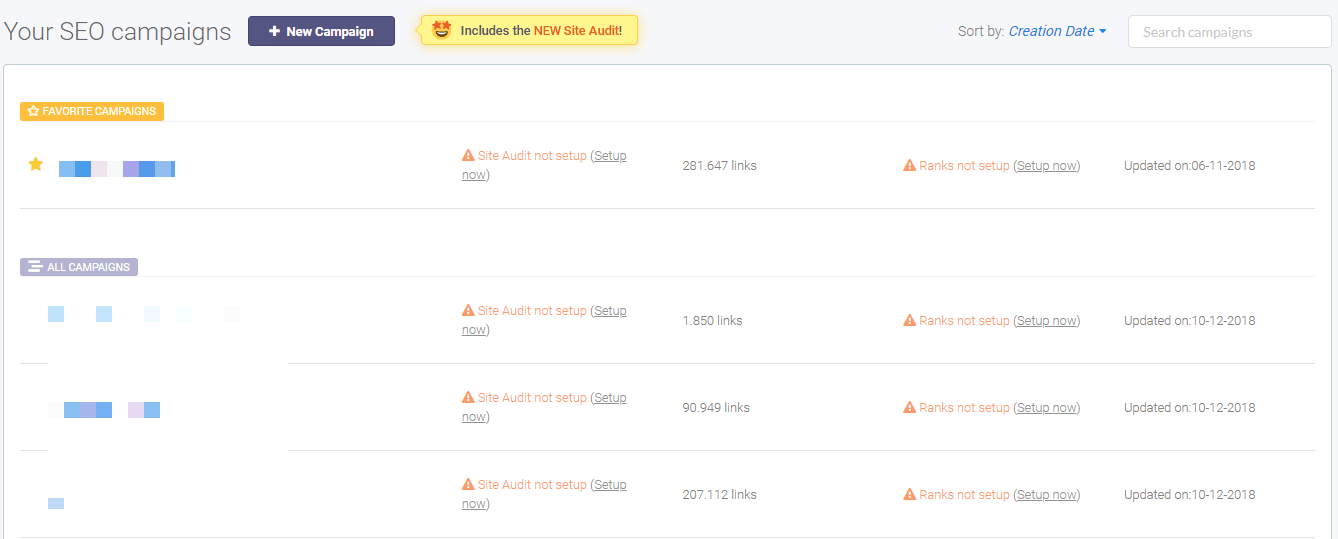

The SEO dashboard lists all the campaigns created by a user. For each campaign, you can run the Site Audit, inBound Link Analysis, Rank Tracking and more. The dashboard also shows which actions have been performed on each campaign, such as on-site analysis or link analysis.

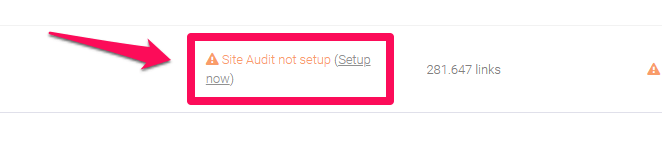

To run the Site Audit for a campaign, go to the desired campaign and click Setup now.

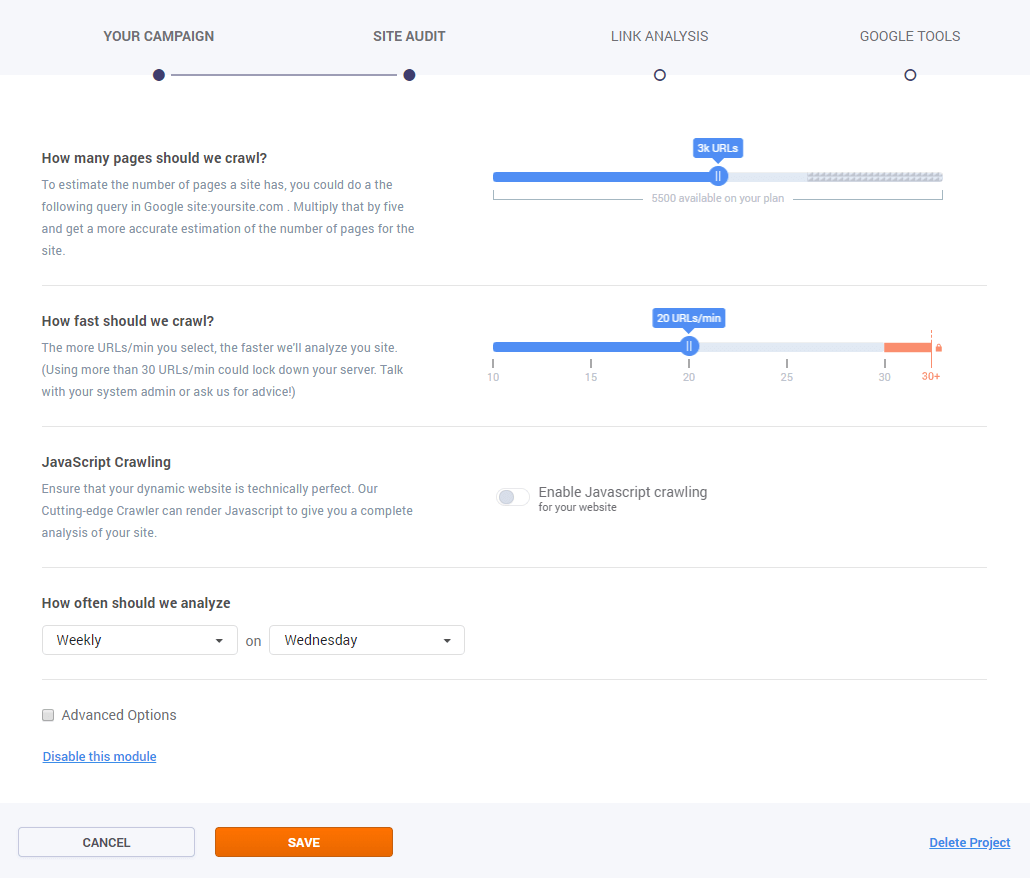

The Campaign creator opens so you can select the settings you’d like for your website. The following options are available:

- the number of crawled pages: 100 – unlimited;

- the crawl speed: 10 URLs/min – 30 URLs/min;

- the option to enable JavaScript crawling;

- the desired elements for crawling;

- the recrawl period.
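As a rough illustration of how the page limit and the crawl speed interact, the sketch below estimates how long a crawl would take. The function name `estimate_crawl_minutes` is hypothetical, and the calculation assumes the crawler sustains the selected rate for the whole crawl, which a real crawl may not do.

```python
import math


def estimate_crawl_minutes(pages: int, urls_per_minute: int) -> int:
    """Return the whole minutes needed to crawl `pages` URLs at a steady rate."""
    if pages < 0 or urls_per_minute <= 0:
        raise ValueError("pages must be >= 0 and urls_per_minute must be > 0")
    # Round up: a partially used minute still counts as a minute.
    return math.ceil(pages / urls_per_minute)


# 100 pages at the slowest and fastest speeds listed above.
slow = estimate_crawl_minutes(100, 10)  # 10 minutes
fast = estimate_crawl_minutes(100, 30)  # 4 minutes (3.33 rounded up)
```

Larger page limits scale the same way, so a slow crawl speed on a big site can add up to many hours.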

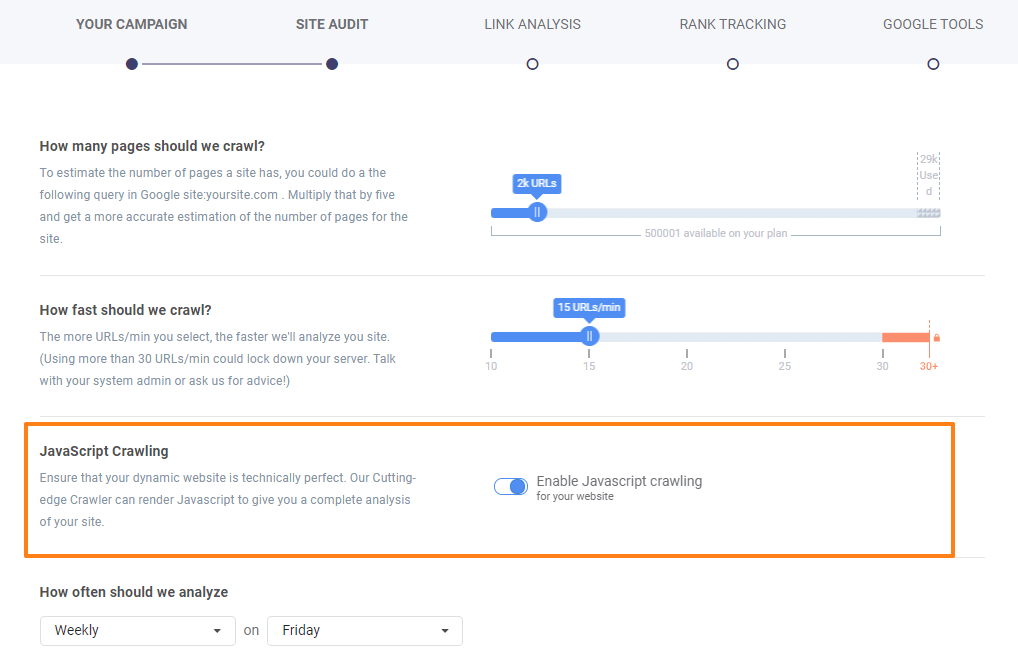

JavaScript Crawling

Use the JavaScript crawler to analyze sites that rely on JavaScript. Check the Enable JavaScript Crawling box to verify that your dynamic website has no technical errors.

Advanced Options

The Advanced Options let you fine-tune the crawl for a more accurate analysis of a website.

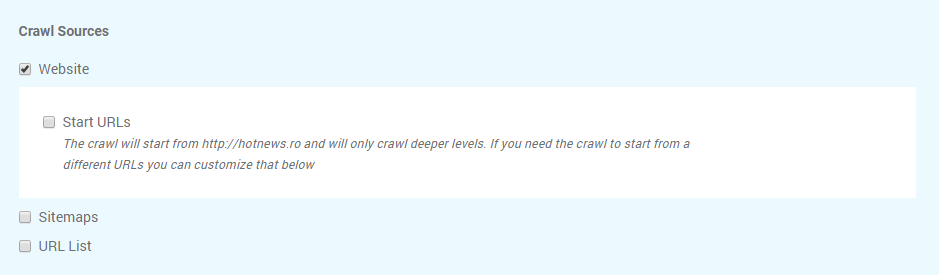

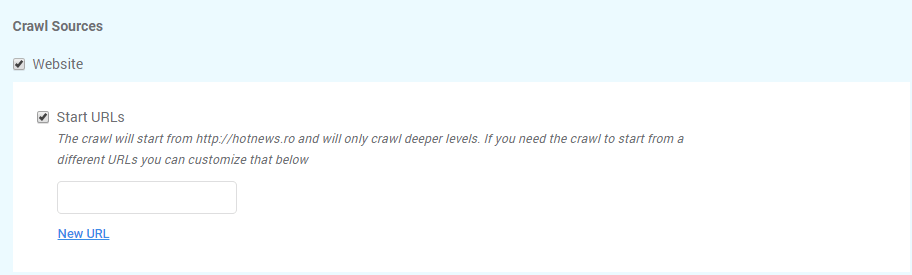

Crawl Sources

By default, cognitiveSEO crawls your website. Two other options are available: it can also be configured to crawl XML Sitemap URLs and/or a provided URL list.

When you select the Crawl website option, the tool follows links from page to page to discover new URLs until every page on the website is crawled. If you want the crawl to start from different URLs, specify them by selecting Start URLs.
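To make the idea of link discovery concrete, here is a minimal sketch of a breadth-first crawl over an in-memory link graph. It is not cognitiveSEO's implementation: the `crawl` function and the `site` dictionary are hypothetical, and the dictionary stands in for fetching each page and extracting its links.

```python
from collections import deque


def crawl(link_graph: dict[str, list[str]], start_urls: list[str]) -> list[str]:
    """Breadth-first discovery: visit start URLs, then every URL they lead to."""
    start = list(dict.fromkeys(start_urls))  # drop repeated start URLs, keep order
    seen = set(start)
    queue = deque(start)
    crawled = []
    while queue:
        url = queue.popleft()
        crawled.append(url)
        for linked in link_graph.get(url, []):
            if linked not in seen:  # only queue URLs we have not met before
                seen.add(linked)
                queue.append(linked)
    return crawled


site = {
    "/": ["/blog", "/products"],
    "/blog": ["/blog/post-1", "/"],
    "/products": ["/products/a"],
    "/orphan": ["/"],  # nothing links here, so a crawl from "/" never finds it
}
pages = crawl(site, ["/"])
```

In this sketch, a page that no other page links to (`/orphan`) is only reached when it is passed in as a start URL.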

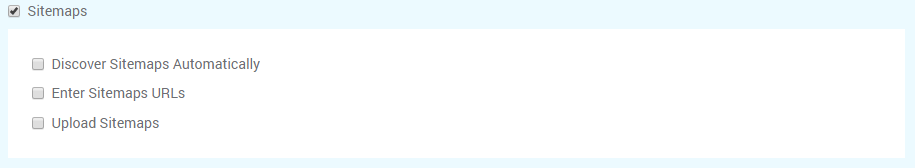

cognitiveSEO has the option to Crawl Sitemaps, which allows you to have a more in-depth audit. After you select the box for Sitemaps, make sure you choose how the Sitemap should be included in the Site Audit. You can let the tool discover it automatically, enter its URL, or upload it from your computer.
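For readers unfamiliar with the format, the sketch below shows how the page URLs can be read out of a standard XML sitemap. The helper name `sitemap_urls` and the sample document are illustrative assumptions; how the tool itself parses sitemaps is not documented here.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(xml_text: str) -> list[str]:
    """Return the <loc> value of every <url> entry in a sitemap document."""
    root = ET.fromstring(xml_text)
    return [
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    ]


example = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/blog/</loc></url>
</urlset>"""

urls = sitemap_urls(example)
```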

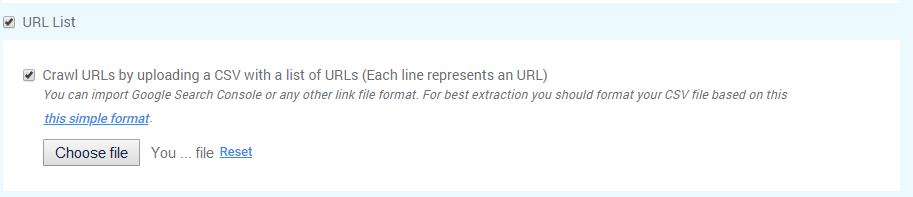

The third option, Crawl URL list, works by uploading a saved file from your computer. Make sure you select both boxes to save your options.

Typically, URL lists are used when you DON’T also crawl the website, in order to crawl a specific area or section of the site.

What to Crawl

Deciding what to crawl can be a little bit tricky. There are lots of elements that you can choose from:

- Internal sub-domains have the following format: subdomain.example.com. One common example of a subdomain is blog.example.com. If your site has subdomains and you want to crawl and analyze them, select the box.

- Non-HTML files are image files, PDFs, Microsoft Office or OpenDocument files, and audio or video files stored on the webserver.

- CSS files define the styling of HTML pages. CSS stands for Cascading Style Sheets and is used by web pages to keep information in the proper display format.

- External links are links that point to another website. For example, links on your pages to other websites' URLs are external links. They send the visitor from your site to another site.

- Images include all the images, in any format, that are added to your website’s media library.

- Nofollow links carry the nofollow attribute, which tells search engines not to follow the links added on a page.

- Noindex pages are pages the webmaster has blocked from appearing in search engines. Pages that have a noindex meta tag in their HTML code, or that return a ‘noindex’ header in the HTTP response, can be crawled by the Site Audit if you select the box.

- JavaScript files are the script files referenced from the HEAD or BODY of an HTML page.
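The distinctions in the list above can be sketched in a few lines. The example below collects the links on a page, flags the nofollow ones, and labels each link as internal, internal sub-domain, or external. `LinkCollector` and `classify` are hypothetical names, and treating `www.` as part of the main site is an assumption of this sketch, not documented tool behavior.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collect (absolute_url, is_nofollow) pairs from <a href> tags."""

    def __init__(self, page_url: str):
        super().__init__()
        self.page_url = page_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        href = attributes.get("href")
        if href:
            rel_tokens = (attributes.get("rel") or "").lower().split()
            absolute = urljoin(self.page_url, href)  # resolve relative links
            self.links.append((absolute, "nofollow" in rel_tokens))


def classify(url: str, site_domain: str) -> str:
    """Label a URL relative to `site_domain` (for example "example.com")."""
    host = (urlparse(url).hostname or "").lower()
    if host in (site_domain, "www." + site_domain):  # assumption: www counts as the main site
        return "internal"
    if host.endswith("." + site_domain):
        return "internal sub-domain"
    return "external"


page_html = """
<a href="/about">About</a>
<a href="https://blog.example.com/post">Blog</a>
<a rel="nofollow" href="https://other.org/">Partner</a>
"""
collector = LinkCollector("https://example.com/")
collector.feed(page_html)
report = [(url, classify(url, "example.com"), nofollow) for url, nofollow in collector.links]
```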

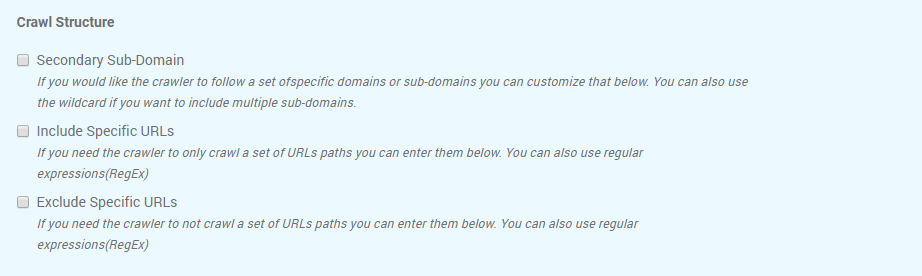

Crawl Structure

The Crawl structure section has further options for crawling a website’s data from specific sources, such as a secondary sub-domain or a specific URL path. If there are URLs that you don’t want to crawl, select the last box to tell the tool to crawl all the links except those under the selected path.
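One way to picture the include and exclude options is as a path-prefix filter applied to every discovered URL. This is only a sketch under that assumption; `should_crawl` and its parameters are hypothetical and do not correspond to named settings in the tool.

```python
from urllib.parse import urlparse


def should_crawl(url, include_prefix=None, exclude_prefix=None):
    """Decide whether a URL falls inside the configured crawl structure."""
    path = urlparse(url).path or "/"
    if exclude_prefix and path.startswith(exclude_prefix):
        return False  # inside the path the user asked to skip
    if include_prefix and not path.startswith(include_prefix):
        return False  # outside the only path the user asked for
    return True


urls = [
    "https://example.com/",
    "https://example.com/blog/post-1",
    "https://example.com/admin/login",
]
everything_but_admin = [u for u in urls if should_crawl(u, exclude_prefix="/admin/")]
blog_only = [u for u in urls if should_crawl(u, include_prefix="/blog/")]
```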

Crawl Settings

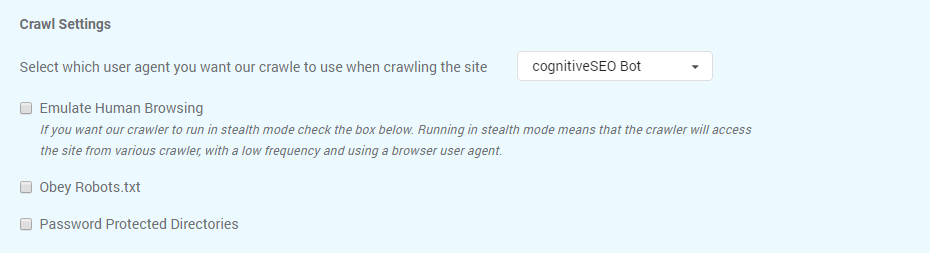

Under Crawl Settings, choose the settings that suit the website you want to audit and decide which method is used for crawling.

You can change the user agent for crawling from the list available on cognitiveSEO:

- Applebot

- Bingbot

- Bingbot Mobile

- Chrome

- cognitiveSEO Bot

- Firefox

- Generic

- Google Web Preview

- Googlebot

- Googlebot – Image

- Googlebot – Mobile Feature Phone

- Googlebot – News

- Googlebot – Smartphone

- Googlebot – Video

- Internet Explorer 8

- Internet Explorer 6

- iPhone
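Changing the user agent means changing the `User-Agent` header sent with each request, which can change what a server returns. The sketch below builds (but does not send) a request with a chosen user agent. The Googlebot string is the one Google publishes for its crawler; the `ExampleAuditBot` entry and the `build_request` helper are hypothetical, and the exact strings the tool sends are not documented here.

```python
from urllib.request import Request

USER_AGENTS = {
    "Googlebot": "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)",
    "ExampleAuditBot": "ExampleAuditBot/1.0",  # hypothetical placeholder
}


def build_request(url: str, agent_name: str) -> Request:
    """Prepare a request that identifies itself with the chosen user agent."""
    return Request(url, headers={"User-Agent": USER_AGENTS[agent_name]})


request = build_request("https://example.com/", "Googlebot")
# urllib stores header names capitalized as "User-agent".
sent_agent = request.get_header("User-agent")
```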

The Emulate Human Browsing option means that the crawler accesses the site from various crawlers at a low frequency, using a browser user agent and following natural user behavior.

A robots.txt file is used to issue instructions to robots on what URLs can be crawled on a website. If you select Obey Robots.txt, the crawler only fetches the URLs that the robots.txt file allows.
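To see what obeying robots.txt means in practice, the following sketch uses Python's standard `urllib.robotparser` to check two URLs against a small sample file. The rules and the `ExampleBot` user agent are made up for illustration.

```python
from urllib.robotparser import RobotFileParser

robots_txt = """\
User-agent: *
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())  # parse rules from text instead of fetching them

# A crawler that obeys robots.txt asks before fetching each URL.
allowed = parser.can_fetch("ExampleBot", "https://example.com/blog/post.html")
blocked = parser.can_fetch("ExampleBot", "https://example.com/private/report.html")
```

Here `allowed` is `True` and `blocked` is `False`, so an obedient crawler would skip everything under `/private/`.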

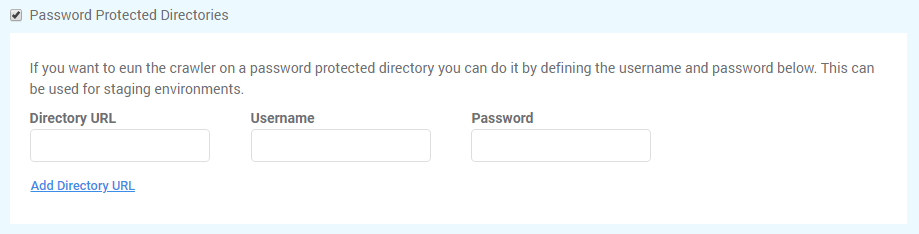

Use Password Protected Directories if you want to run the crawler on a password-protected directory: define the username and password in the fields below. This can be used for staging environments.
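Password-protected directories are often secured with HTTP Basic authentication. Assuming that scheme (the tool's own mechanism is not documented here), the sketch below shows how a username and password turn into the `Authorization` header a crawler would send. The `basic_auth_header` helper and the credentials are hypothetical.

```python
import base64


def basic_auth_header(username: str, password: str) -> dict[str, str]:
    """Build the Authorization header used by HTTP Basic authentication."""
    credentials = f"{username}:{password}".encode("utf-8")
    token = base64.b64encode(credentials).decode("ascii")
    return {"Authorization": f"Basic {token}"}


headers = basic_auth_header("staging-user", "s3cret")
```

Because Basic authentication only encodes the credentials rather than encrypting them, it should be used over HTTPS.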

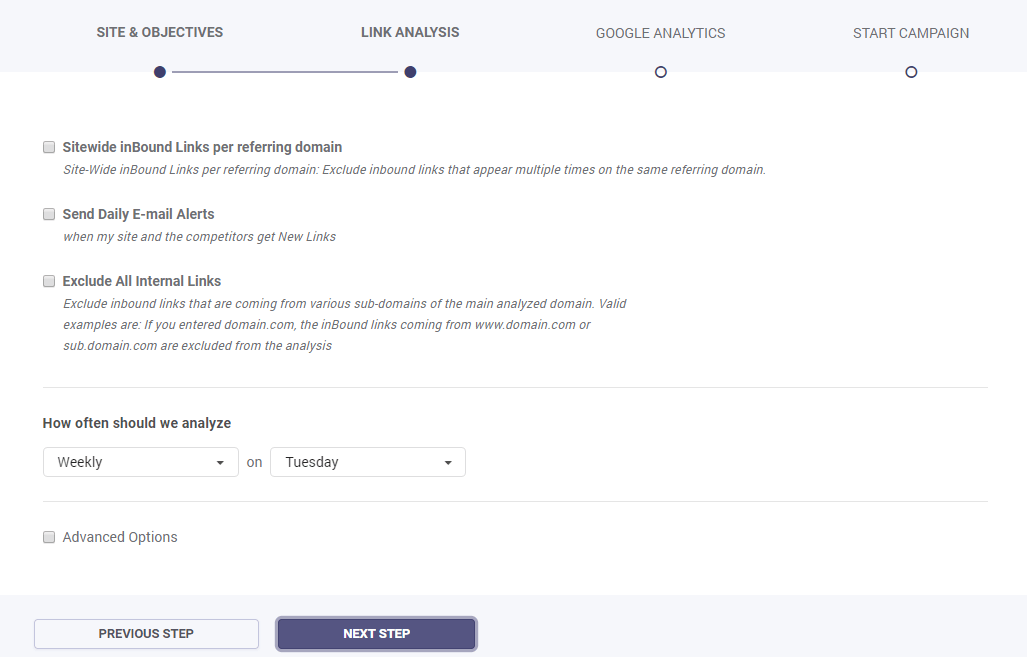

Link Analysis

Dialog Options

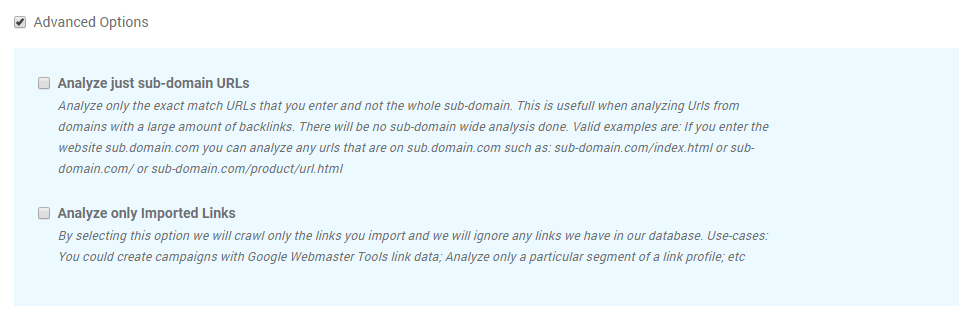

Advanced Options

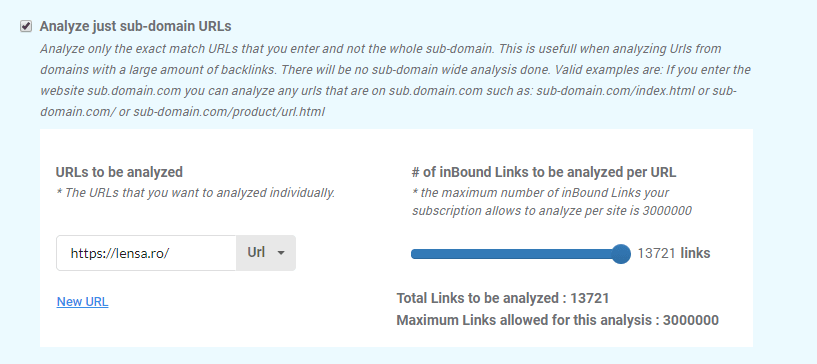

As with the Site Audit, the Link Analysis module lets you apply advanced settings to your campaign. Here, you have the option to analyze just sub-domain URLs, which means that you can crawl only specific links and not the whole sub-domain. If you have a large website with a high number of backlinks, this option is a great fit. No subdomain-wide analysis will be done.

For example, if your website is sub.domain.com, you can analyze any URLs that are on sub.domain.com, such as sub.domain.com/blog.html, sub.domain.com/ or sub.domain.com/product/url.html.

When you want to analyze just a list of links, choose the Analyze only Imported Links option, which allows you to import a list of backlinks. The tool crawls only the links you import and ignores any links we have in our database. For example, you could create campaigns with Google Webmaster Tools link data or analyze a particular segment of a link profile.

Rank Tracking

Rank Tracking helps you analyze how your keywords’ positions evolve over time.

Dialog Options

| Option | Description |
| --- | --- |
| What market would you like to monitor your marketing efforts? | This option allows you to select the rank tracking market. You can even track keywords locally, by city or zip code. |
| Enter the keywords you want to monitor (in Google, Bing and Yahoo) | In this box, you can enter your keywords, one keyword per line. If the account is set to daily analysis, the keyword analysis is delivered within 24 hours. If the account is set to weekly analysis, it is delivered within one week at most. |
| Track keyword rankings on any sub-domain of the entered sites | If this option is checked, the selected keywords are reported both for the main domain and for all sub-domains (e.g. www.auto.com, www.car.auto.com, www.motorcycle.auto.com etc.) |
| Send Instant E-mail Alerts | Get email notifications when your sites’ and your competitors’ ranks change. |
| How often should we analyze | This option allows you to select the frequency of the emails you receive. It can be daily or weekly. |
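Since keywords are entered one per line, a pasted list can be tidied before it goes into the box. The sketch below is one hypothetical way to do that. Dropping blank lines and case-insensitive repeats is an assumption of this example, not documented behavior of the tool.

```python
def parse_keywords(raw_text: str) -> list[str]:
    """Split pasted text into keywords: one per line, trimmed, blanks and repeats dropped."""
    keywords = []
    seen = set()
    for line in raw_text.splitlines():
        keyword = " ".join(line.split())  # trim and collapse inner whitespace
        key = keyword.lower()
        if keyword and key not in seen:
            seen.add(key)
            keywords.append(keyword)
    return keywords


pasted = "seo tools\n  backlink checker \n\nSEO Tools\nrank tracker"
keywords = parse_keywords(pasted)
```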

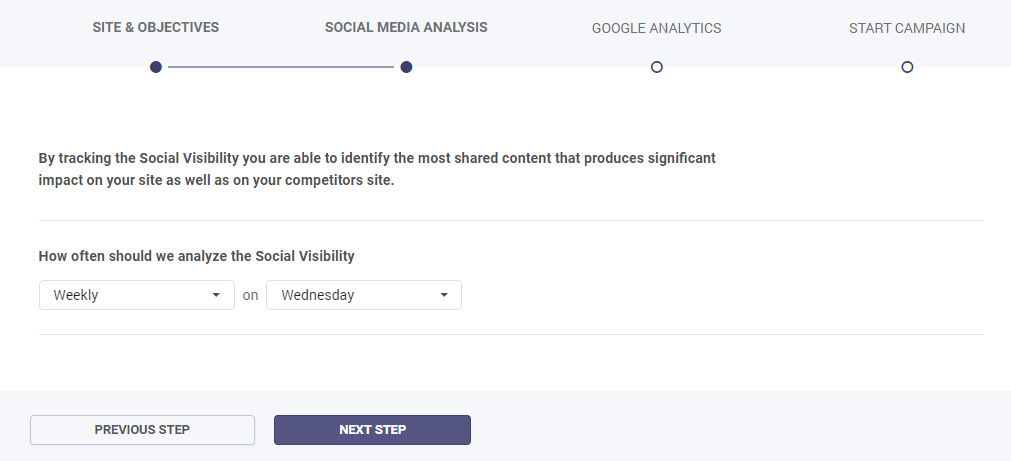

Social Media Analysis

This module will crawl and extract, on demand, the social shares for your site’s pages. It helps you understand which of your content is shared most and create content that wins.

The tool will detect your social activity and show you information about your social signals. Make sure you choose the frequency of the social media crawling, then select Next Step.

Google Analytics

You can authorize your Google Analytics account to measure traffic and get the most relevant inbound marketing data for your website. Doing so gives you extra information and can help you create better reports with the SEO data you get from the cognitiveSEO toolset.

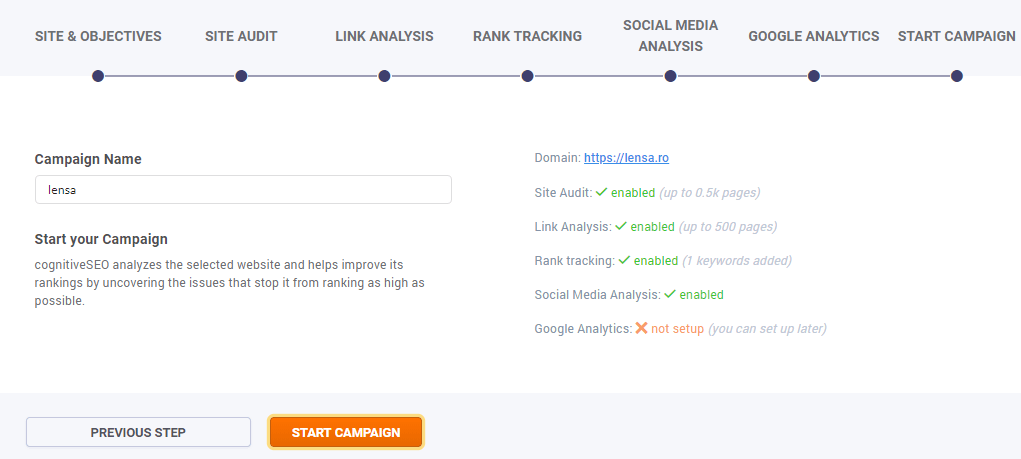

Start Campaign

Here you can enter a name for your campaign. Now all you have left to do is press the Start Campaign button and you’re done!

In the foreground, the site will display a progress dialog. The process can take up to a few minutes, depending on the campaign complexity.

Meanwhile, in the background, the links are extracted from the data providers. Our software aggregates the links, removes duplicates, and crawls them on demand. Link crawling is a very important part of the analysis: because it is done on demand for each client, a set of unique algorithms specific to our system is applied. For example, we detect the website type (blog, web directory, forum, etc.), the type of link (in content or out of content) and many other aspects that give you a very complete analysis and help you understand your profile and that of your competitors. You will also understand the strategy your competitors use and how you can improve your own strategy to obtain the best visibility in search engines.
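The aggregation and de-duplication step can be pictured with a small sketch. It is not the actual cognitiveSEO pipeline: the `normalize` and `aggregate` helpers, the normalization rules (lower-casing the host and ignoring the fragment), and the sample provider lists are all assumptions made for illustration.

```python
from urllib.parse import urlsplit, urlunsplit


def normalize(url: str) -> str:
    """Lower-case scheme and host and drop the fragment so equal links compare equal."""
    parts = urlsplit(url.strip())
    return urlunsplit(
        (parts.scheme.lower(), parts.netloc.lower(), parts.path or "/", parts.query, "")
    )


def aggregate(*provider_lists):
    """Merge link lists from several providers, keeping one entry per normalized link."""
    merged = {}
    for links in provider_lists:
        for link in links:
            merged.setdefault(normalize(link), link)
    return list(merged)  # normalized links, in first-seen order


provider_a = ["https://Example.org/page", "https://news.example.net/story?id=7"]
provider_b = ["https://example.org/page#comments", "https://forum.example.com/t/42"]
unique_links = aggregate(provider_a, provider_b)
```

In this example the two spellings of the `example.org` link collapse into one, leaving three unique links to crawl.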